Is AI a threat to the knowledge-based service economy?

The first few months of 2023 have seen a huge amount of news emerging about AI, most notably ChatGPT from OpenAI, which is able to give human-like responses to pretty much any question thrown at it. As I (Stu Coates) write this in late April 2023, it's almost certain that any facts I write will be out of date within weeks, if not days. Even so, I will explore where we've come from, where we are now, where I think we may be heading, and how the emergence of this technology may impact the knowledge-based service industry.

Let’s start with where we’ve come from.

Before the Internet became generally available to the public in the early 1990s, if you wanted to know something you'd either have to find an expert to talk to or spend time in your local library trawling through an endless supply of books. If, like me, you wanted the latest information about a particular technical subject, you had to visit a local bookshop that could order the latest books, which would then arrive a month or so later… normally at great expense.

The arrival of the Internet changed everything… but not immediately.

In the early days of the publicly available Internet, much of the available knowledge was to be found on individual servers, and you had to know where to find them. Along came technologies and services such as Gopher, WAIS, and Archie that attempted to provide some form of structure and discoverability to the still fairly limited knowledge that was available.

The birth and subsequent widespread adoption of the World Wide Web (WWW, or simply "the web") made web browsers ubiquitous, finally giving the general public an easy-to-use portal to the world's knowledge.

In the early days of the web, much of the content was indexed by hand. Various directories of web content were built in the early 1990s, probably the largest of which was the Yahoo! directory. These directories were not always searchable; instead they were hierarchical, allowing the user to navigate through various categories to locate a link that would take them to the actual website. As the owner of a website, you would have to apply for your site to appear in each directory.

The mid-1990s saw the emergence of what we'd now recognise as a search engine. None of these early search engines, the likes of Excite, Lycos, Inktomi, and AltaVista, remains a major force today, but the fundamentals of how they worked are still present in modern engines such as Google and Bing. A search engine employs a "crawler" or "spider" that reads the content of a web page, indexes that content and then follows any links to other pages. Each search engine does things slightly differently, using proprietary algorithms that attempt to direct the user to the best content for their query. The user ultimately ends up visiting a page on the web that is the source of the information.

This is an important point, as the user will always be directed to the source of the information, whether it's a news organisation, a university, or an individual's blog. They can then judge for themselves how reliable the information is.
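For readers curious about the mechanics, here is a minimal sketch in Python of the crawl, index and follow-links loop described above. It assumes a tiny, made-up in-memory "web" (the `WEB` dictionary and example.com URLs are hypothetical) instead of real HTTP requests, and it leaves out the ranking algorithms that real search engines rely on. The point it illustrates is the one above: a search returns the source pages themselves, not a composed answer.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to (page text, outgoing links).
WEB = {
    "https://example.com/": ("Welcome to the example home page", ["https://example.com/dilbert"]),
    "https://example.com/dilbert": ("Dilbert cartoon about a mauve database", ["https://example.com/"]),
}

def crawl(start_url, web):
    """Breadth-first crawl: visit each page once, index its words, follow its links."""
    index = {}                  # word -> set of URLs whose text contains that word
    seen = {start_url}
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        if url not in web:      # skip links to pages we cannot fetch
            continue
        text, links = web[url]
        for word in text.lower().split():
            index.setdefault(word, set()).add(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return index

def search(index, query):
    """Return the source URLs that contain every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))   # copy so the index is not modified
    for word in words[1:]:
        results &= index.get(word, set())
    return results

index = crawl("https://example.com/", WEB)
print(search(index, "mauve database"))  # -> {'https://example.com/dilbert'}
```

The search hands back links, leaving the reader to visit the page and decide how far to trust it, which is exactly the step a chatbot answer skips.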

Things change again.

Enter AI-powered systems such as ChatGPT, which present a very different experience. The user asks a question and is presented with a response, not a series of links. At first glance this seems amazing; it almost seems as if the system is a hyper-intelligent being, capable of answering any question no matter what the subject. The truth is very different.

A deep dive into what AI is lies beyond the scope of this article. If you're interested, a good place to start is the Wikipedia pages on Expert Systems and AI. What I will explore is what systems like ChatGPT are capable of today, and their strengths and weaknesses.

The important thing to remember is that the answers that come out of any computer program are typically only as good as the input. The model behind ChatGPT has been trained on a huge corpus of text, typically from websites, but importantly across a diverse range of topics, subjects and styles. During this training, ChatGPT is not really trying to learn the meaning of the content, but rather general patterns, grammar and language semantics. After this phase, humans fine-tune the model using labelled examples.

What we end up with is a model that can give very convincing responses to questions. The current model prioritises generating answers that are coherent and contextually relevant over actually being correct. It does not have a built-in mechanism to verify the factual accuracy of the generated text. As a result, the model may "hallucinate" and produce text that appears plausible but is factually incorrect. The IEEE has an excellent explanation.

This ability to get answers wrong while sounding very convincing is where the impact on knowledge-based service companies lies. Potential customers of your company, instead of engaging with you, may ask a system such as ChatGPT the question and get a convincing response, which may not be correct. How would the person asking know whether the answer was correct? The exact veracity may not matter in some cases, but in others acting upon incorrect information could incur significant penalties. This is where consulting a professional would be the wiser option.

In an article from the ICAEW, Stuart Cobbe sums it up nicely: “like an ill-informed but confident junior”.

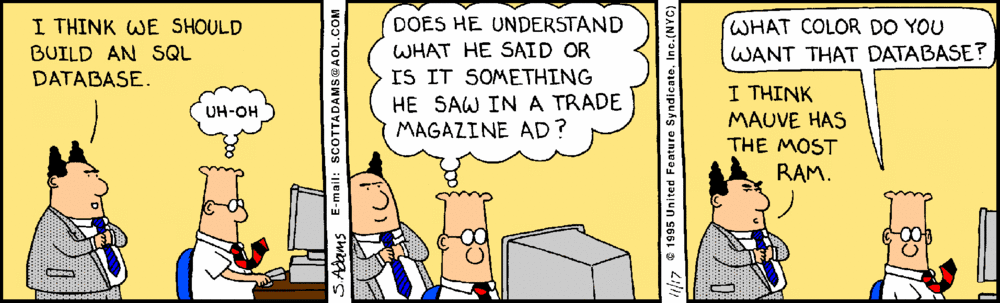

To explain the hallucination problem, I'll use an example based on an interaction with ChatGPT (GPT-4) regarding a well-known cartoon, Dilbert.

In 1995, this Dilbert cartoon was published. I based my questioning on this exact cartoon.

Stu: If Scott Adams wanted an SQL database, what color should it be?

ChatGPT: The color of an SQL database is not a relevant attribute, as databases are not physical objects that have a color. SQL databases are software used to manage structured data, and their appearance depends on the user interface or application used to interact with them. If Scott Adams, the creator of the Dilbert comic strip, wanted an SQL database, he would be concerned with its functionality and performance, rather than its color. However, if he wanted a specific color theme for the user interface of the application used to interact with the database, that would be a matter of personal preference.

Stu: According to the Dilbert cartoon in 1995 on the 17th November, a database colored “mauve has the most RAM”

ChatGPT: The reference you are making is from a Dilbert comic strip by Scott Adams published on November 17, 1995. In the comic, the character Dilbert says, “Studies have shown that mauve databases have the most RAM.” This statement is a joke, as color has no correlation to the amount of RAM or any other technical specifications of a database.

Dilbert is a satirical comic strip that often pokes fun at workplace situations, technology, and management practices. The comic strip’s humor often comes from absurd situations and misunderstandings, so it’s essential to understand that the statement made in the comic strip is not accurate and should not be taken seriously.

Stu: Dilbert does not say it, PHB says it

ChatGPT: Apologies for the mistake in my response. You are correct that in the Dilbert comic strip published on November 17, 1995, the Pointy-Haired Boss (PHB) says, “Studies have shown that mauve databases have the most RAM.” The comic strip is indeed satirical, and the statement made by the Pointy-Haired Boss is meant to be humorous and nonsensical, highlighting his lack of understanding of technology. In reality, the color of a database is unrelated to its technical specifications, such as the amount of RAM.

Stu: He doesn’t mention studies either. I think you’re hallucinating again

ChatGPT: You’re right, I apologize for the mistakes in my previous responses. In the Dilbert comic strip from November 17, 1995, the Pointy-Haired Boss says, “I think I read somewhere that mauve has the most RAM.” This statement is still meant to be humorous and nonsensical, as it highlights the Pointy-Haired Boss’s lack of understanding of technology. The color of a database has no bearing on its technical specifications or performance, including the amount of RAM.

Stu: You’re still wrong with the exact quote, do you need a clue?

ChatGPT: I apologize for the inaccuracies in my previous responses. Please provide the correct quote or context, and I will do my best to address your question or concern accordingly.

Stu: PHB says: “I think mauve has the most RAM”

ChatGPT: Thank you for providing the correct quote. In the Dilbert comic strip from November 17, 1995, the Pointy-Haired Boss indeed says, “I think mauve has the most RAM.” The statement is humorous and nonsensical, as it showcases the Pointy-Haired Boss’s lack of understanding of technology. In reality, the color of a database or any software has no impact on its technical specifications, including the amount of RAM. The comic strip uses this absurd statement to satirize the misunderstandings that can occur in the workplace, particularly regarding technology.

Let’s analyse the above and see what’s happening.

My initial question deliberately makes no sense. It is designed to elicit an interesting response and to point ChatGPT towards the exact cartoon I was referring to. ChatGPT quite rightly spots that the question doesn't make sense, and it does identify Scott Adams as the author of the Dilbert cartoons.

I then tell ChatGPT which particular cartoon I am referring to. It parrots back the details I've given it and correctly mentions that the colour is irrelevant, but it gets interesting when it makes up a quote that does not appear in the cartoon at all. This is the "plausible but wrong" characteristic of some responses from ChatGPT.

I correct who said the (incorrect) quote, and it correctly understands that "PHB" refers to the Pointy-Haired Boss character in the Dilbert cartoon.

Next, I tell ChatGPT that the quote it has responded with is incorrect. This prompts it to invent another quote that’s not present in the cartoon.

I offer it a clue and, when it asks for one, provide the correct quote.

What does this all mean?

What this brief and somewhat contrived interaction shows is that the ChatGPT system will provide very convincing responses to questions, but where it does not have completely factual answers, it will fabricate/hallucinate something that sounds plausible.

This hallucination is pretty harmless in the quite trivial example above, but imagine asking it for advice on complex subjects such as current tax law, accounts preparation across a group of companies, or the sale of a business. In these situations, getting the wrong (but plausible-sounding) advice may result in financial loss or falling foul of the law.

It's unfortunate that the very plausible content, combined with the "human-like", slowly typed delivery of the ChatGPT user interface, will convince many people that the responses are accurate.

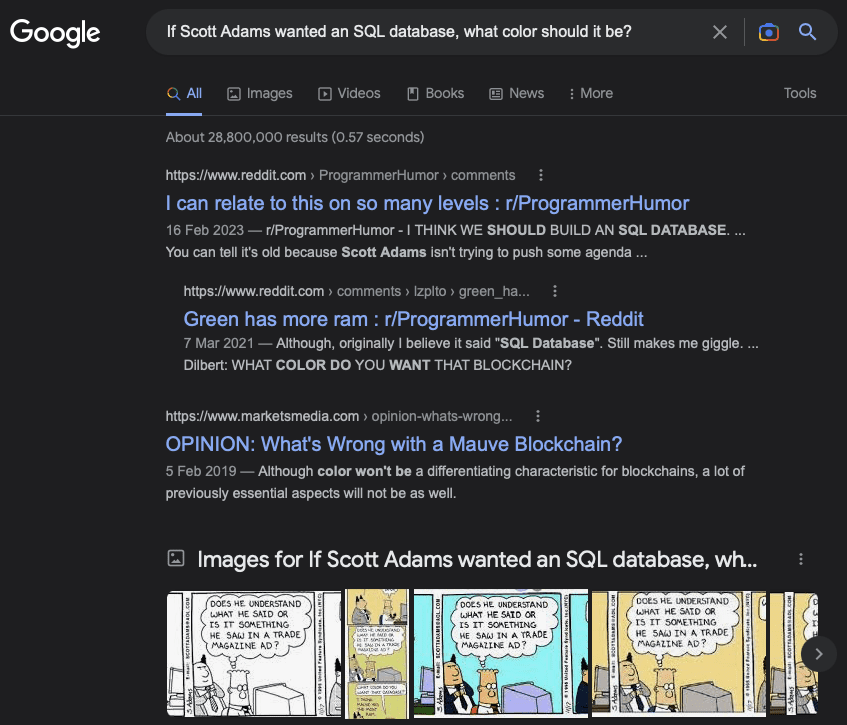

Out of interest, I typed my initial question into Google which returned (in 0.57 seconds) references to the original cartoon that I was referring to.

There's no doubt that this type of AI chatbot will improve in capability and accuracy over time. The current ChatGPT has been trained on data up to September 2021, so it cannot give responses based on data or events since then.

One recent advancement is the ability to plug in live information from other sources, so the AI can draw on up-to-date information. This is very much in its early stages and not yet generally available. The ability to consume information about events in real time could vastly improve its usefulness, especially when the subject being queried changes frequently, e.g. tax rules and interest rates.

The problem remains that the output cannot currently be relied upon. Because the responses are presented in such a convincing manner, people are being misled. This may be fine if you're asking for colour recommendations for a new hat (or even a database), but imagine asking it about the symptoms of an illness and being misdiagnosed, or about the possible interactions between two particular drugs.

It would be helpful if the output gave some indication of the confidence it has (or you should have) in the responses given, ranging from, say, 5 = "a fact I can cite sources for" to 1 = "I've just made it up, but it sounds plausible". You would then be able to make an educated decision about whether to trust it, in the same way that today you can judge whether Google has sent you to Dr Nick or to the NHS.

At this point in time, systems such as ChatGPT should be considered a potentially useful novelty whose output cannot be relied upon to be correct. In the hands of experts they can be very useful research tools, but they are not a substitute for deep knowledge of the subject. For many queries in the knowledge-based service industry, there's no one-size-fits-all answer. The best advice will always come from skilled professionals who are experienced in the subject, are capable of asking the right questions, and can then give bespoke advice crafted to the particular requirements.

So, if you want fashion advice, or inspiration for a poem, then fill your boots with AI chatbots. But for the stuff that really matters in your life and business, seek advice from experts.

The mainstream tech press headlines proclaiming that "AI will kill us all" may well come true if we blindly believe what AI tells us!

Finally, a word from ChatGPT (image from DALL-E)… a haiku:

Afraid yet?

The information available on this page is of a general nature and is not intended to provide specific advice to any individuals or entities. We work hard to ensure this information is accurate at the time of publishing, although there is no guarantee that such information is accurate at the time you read this. We recommend individuals and companies seek professional advice on their circumstances and matters.